Recording of the interview I gave at SAP TechEd Amsterdam, talking about my experiences with SAP NetWeaver Gateway and Duet Enterprise, SAP Customer Engagement Initiative programs, and the recently released NetWeaver Gateway Productivity Accelerator for Microsoft (GWPAM).

Sunday, November 17, 2013

Friday, November 1, 2013

Tip: activate Gateway Metadata cache to resolve NullPointer / CX_SY_REF_IS_INITIAL error

For a customer we are installing the myHR suite from Cordis Solutions to deliver ESS/MSS functionality within a SharePoint application. The myHR suite is an SAP-certified Duet Enterprise product. As part of the project we first deploy the prerequisite platform elements, SAP NetWeaver Gateway 2.0 and Duet Enterprise for Microsoft SharePoint and SAP, and then install the myHR suite. We do this across the customer landscape of development/test, acceptance and production environments. Each installation ends with a technical validation of the environment, covering Gateway, Duet Enterprise and Cordis myHR.

During the technical validation of each environment, I encounter the issue that the first 2 to 3 invocations of each myHR Duet Enterprise service return the error CX_SY_REF_IS_INITIAL. After 3 invocations, each myHR service successfully returns the requested SAP data. The explanation of this behavior is a kind of 'warm-up' effect: the Duet Enterprise services installed in the SAP Gateway system are not compiled/generated until their first invocation, which results in the CX_SY_REF_IS_INITIAL error on the Gateway system and a NullPointer exception in the invoking SharePoint UI context. After a few invocation attempts, the service components are generated and available at runtime in the SAP system, and from then on the invocation successfully returns the SAP data.

Last week, during the technical validation of the acceptance environment, this symptom manifested itself again, as I expected. However, to my surprise the warm-up effect did not occur: all service invocations kept failing. Via ABAP debugging, I traced the error location. The problem originated in the internal SAP Gateway method 'GET_META_MODELS', which instantiates the metadata model of the invoked service. I compared the runtime behavior on the faulty system with that of an environment that had been successfully 'warmed up'. The difference was that on the latter system, the method to create the metadata model was not even reached. Well, not anymore... There was no need to create the metadata model, because it could be retrieved from the Gateway Metadata cache.

This turned out to be the reason why the warm-up effect did not occur: during the SAP Gateway deployment, activating the Gateway Metadata cache had been forgotten in this environment. After correcting that, the myHR services do start returning data. Well, that is... from the 3rd invocation on...
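The difference between the two systems can be illustrated with a minimal Python sketch. It is not Gateway code: `MetadataProvider`, `load_meta_model` and the service name `ZMYHR_SRV` are hypothetical, and the build function merely stands in for the expensive path through GET_META_MODELS. With the cache active, the model is built once per service and later calls are served from the cache; with the cache inactive, every call takes the build path again, so no warm-up ever happens.

```python
from typing import Callable, Dict


class MetadataProvider:
    """Hypothetical stand-in for a service metadata lookup with an optional cache."""

    def __init__(self, build: Callable[[str], dict], cache_active: bool) -> None:
        self._build = build                # expensive path, analogous to GET_META_MODELS
        self._cache_active = cache_active
        self._cache: Dict[str, dict] = {}
        self.build_calls = 0               # how often the expensive path was taken

    def get_model(self, service: str) -> dict:
        if self._cache_active and service in self._cache:
            return self._cache[service]    # cache hit: the build path is not reached
        self.build_calls += 1
        model = self._build(service)
        if self._cache_active:
            self._cache[service] = model   # only kept when the cache is switched on
        return model


def load_meta_model(service: str) -> dict:
    """Hypothetical builder; a real system would generate/load the model here."""
    return {"service": service, "entity_sets": ["Employees"]}


if __name__ == "__main__":
    for active in (True, False):
        provider = MetadataProvider(load_meta_model, cache_active=active)
        for _ in range(5):
            provider.get_model("ZMYHR_SRV")
        print(f"cache active={active}: build path taken {provider.build_calls}x")
```

Running it shows the build path taken once with the cache active and five times with the cache inactive.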

Labels:

Duet Enterprise,

Interoperability,

NetWeaver Gateway

Monday, October 28, 2013

GWPAM - SAP data directly in Microsoft Office client applications

The flagship of the Duet Enterprise / Gateway product team is Duet Enterprise for Microsoft SharePoint and SAP. Customers are very satisfied with the functionality and capabilities provided by this integration product, and with its demonstrated stability. A frequent request is to expose SAP data and processes at this level also in Microsoft applications beyond SharePoint. The product team has responded to this market demand: last week at SAP TechEd 2013 in Las Vegas, SAP NetWeaver Gateway Productivity Accelerator for Microsoft, GWPAM for short, was launched.

As a participant in the Duet Enterprise Customer Engagement Initiative (CEI) program, I was involved in GWPAM from its early development stage (under the internal codename BoxX). At the request of the Duet Enterprise product team I performed so-called Takt-Testing, and reported my technical and functional thoughts and findings. It is good to see that aspects of my feedback, predominantly influenced by my own technical background as a .NET architect/developer, have actually made it into the final product.

Like its big brother, GWPAM is in essence a product for the IT organization. It is an integration framework that internal IT departments and SAP + Microsoft partners (the ecosystem) can use to build their own scenarios in which SAP / Microsoft integration is an important architectural element. GWPAM provides a Microsoft Visual Studio add-in that .NET developers can use, directly in their familiar integrated development environment, to look up SAP Gateway OData services and to generate proxies to those services in standard .NET code.
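Under the hood, such a proxy issues OData requests over HTTP to the Gateway system. The Python sketch below only illustrates what such a request URL can look like; it is not GWPAM-generated code. It assumes the default Gateway service path `/sap/opu/odata/sap/<SERVICE>/`, and the host `gw.example.com`, service `ZCUSTOMER_SRV`, entity set `Customers` and property `City` are hypothetical.

```python
from typing import Optional
from urllib.parse import quote, urlencode


def odata_query_url(host: str, service: str, entity_set: str,
                    filter_expr: Optional[str] = None, top: Optional[int] = None) -> str:
    """Compose a read URL for an entity set of a Gateway OData service (illustrative)."""
    base = f"https://{host}/sap/opu/odata/sap/{service}/{entity_set}"
    params = {"$format": "json"}
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    # quote_via=quote encodes spaces as %20 rather than '+';
    # safe="$" keeps the '$' of the OData system query options readable.
    return base + "?" + urlencode(params, safe="$", quote_via=quote)


# Usage: odata_query_url("gw.example.com", "ZCUSTOMER_SRV", "Customers",
#                        "City eq 'Amsterdam'", top=10)
```

A generated proxy would hide this kind of URL composition and response parsing behind typed classes, which is the plumbing .NET developers no longer have to write by hand.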

The first foreseen scenarios are Microsoft Office add-ins that expose and integrate SAP business data in the Microsoft Office clients people use every day. For example: SAP CRM customer data as Outlook contacts, invoice approval requests as Outlook tasks, functional management of SAP master data through Excel, BW report data rendered in PowerPoint, and submitting SAP CATS time tracking directly from your Outlook Calendar...


Like Duet Enterprise for SharePoint, GWPAM provides support for the typical and recurring plumbing aspects of SAP/Microsoft integration: connectivity, Single Sign-On, end-to-end monitoring, .NET development tooling, and integration with SAP Solution Manager. GWPAM offers a complete SAP / Microsoft integration package.

As with Duet Enterprise, the two suppliers put their combined strength and market presence behind this new product offering. This is also a major distinction from the various proprietary connectors offered by smaller parties.


As an SAP / Microsoft interoperability expert, I am enthusiastic about the addition of GWPAM to the SAP / Microsoft integration spectrum. GWPAM makes it possible to build a new category of functional scenarios for end customers, now also for organizations that do not have SharePoint in their application landscape but do have Microsoft Office installed on the desktops, and want to use that familiar employee environment for intuitive operation of SAP data and business processes.

Saturday, September 28, 2013

Avoid corrupted site columns due to feature re-activation

One of our clients reported that provisioned site columns get corrupted after uninstalling the provisioning feature. Uninstalling the feature is a step in repairing an erroneous situation in which a sandbox solution was accidentally also deployed as a global farm-based solution. This incorrect deployment must be fixed, because as a result the features now appear as duplicate entries in the list of site (collection) features: one installed via the sandbox solution (correct, administered in the site collection's content database), and one installed via the farm solution (incorrect, administered in the farm configuration database).

At first thought, the simple fix is to deactivate the provisioning feature that originates from the farm deployment, remove the feature, then retract the global farm solution and remove it from the farm solution store. Next, activate the feature deployed via the sandbox solution to arrive at the correct deployment situation. However, in a test run we found that this approach gives an error upon re-provisioning the site columns: The local device name is already in use.

Error details in the ULS log:

Unable to locate the xml-definition for FieldName with FieldId '<GUID>', exception: Microsoft.SharePoint.SPException: Catastrophic failure (Exception from HRESULT: 0x8000FFFF (E_UNEXPECTED)) ---> System.Runtime.InteropServices.COMException (0x8000FFFF): Catastrophic failure (Exception from HRESULT: 0x8000FFFF (E_UNEXPECTED))

at Microsoft.SharePoint.Library.SPRequestInternalClass.GetGlobalContentTypeXml(String bstrUrl, Int32 type, UInt32 lcid, Object varIdBytes)

at Microsoft.SharePoint.Library.SPRequest.GetGlobalContentTypeXml(String bstrUrl, Int32 type, UInt32 lcid, Object varIdBytes)

To come up with a solution, I started with a root-cause analysis. Why are the provisioned site columns not completely deleted from the site when the feature is deactivated? The explanation is that the provisioning feature also binds a content type to the Pages library, and that in our test case a page was created based on that content type. This effectively keeps the content type 'in use' by the Pages library. On feature deactivation the site columns can still be removed at site collection level, but the content type can no longer be removed, due to the descendant content type bound to the Pages library.

The real problem, however, lies in the 'removed' site columns. They are deleted at site collection level, but because of the preserved content type (in the Pages library) their definition has remained in the site collection's content database, with the same ID as at site collection level (note that this differs from a content type bound to a list, which gets a new ID derived from the ID of its source/parent content type at site collection level). For each provisioned artifact, SharePoint records whether its origin is a feature, and if so, effectively couples the artifact to that feature. SharePoint does not allow these artifacts to be automatically deleted or modified by another feature. As a result, the feature re-activation halts with an error when it tries to (re)provision the site columns that are still present deep down in the content database, coupled to the Pages library.
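SharePoint content type IDs encode their inheritance: a child's ID is its parent's ID followed either by two hex digits other than 00, or by 00 plus the 32 hex digits of a GUID; the latter is what binding a content type to a list produces. The Python sketch below, with hypothetical helper names, shows that relation, which is why the content type bound to the Pages library still counts as a descendant of the site collection content type.

```python
import re
import uuid

# "0x" followed by one or more pairs of hex digits
_CT_ID = re.compile(r"0x(?:[0-9A-Fa-f]{2})+")


def normalize(ct_id: str) -> str:
    """Validate a content type ID and return it with uppercase hex digits."""
    if not _CT_ID.fullmatch(ct_id):
        raise ValueError(f"not a content type ID: {ct_id!r}")
    return "0x" + ct_id[2:].upper()


def list_child_id(parent_id: str, new_guid: uuid.UUID) -> str:
    """Child ID as created by a list binding: parent ID + '00' + GUID without dashes."""
    return normalize(parent_id) + "00" + new_guid.hex.upper()


def is_descendant(ancestor_id: str, candidate_id: str) -> bool:
    """True if the candidate inherits from the ancestor (assumes both IDs are valid)."""
    a, c = normalize(ancestor_id), normalize(candidate_id)
    return len(c) > len(a) and c.startswith(a)
```

As long as such a descendant exists in a list, the parent content type at site collection level cannot be deleted, which matches the behavior described above.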

With this SharePoint-internal insight, I was able to come up with a foolproof approach to fix the 'duplicate features' issue. The trick is to initially leave the feature definition that originated from the erroneous farm solution in the configuration database, deploy the sandbox solution (which stores the feature definition in the site collection's content database), and activate the feature with the same feature ID from that sandbox solution. Now the feature activation completes without errors and restores the site columns. Finally, the farm-based solution can be retracted and removed from the farm solution store.

Note: I gained this insight by inspecting at SQL level. We all know it is not allowed to make changes at SharePoint content database level (or you lose your Microsoft support), but it is perfectly 'SharePoint-legal' to review and monitor at SharePoint content database level.

Used/useful SQL statements:

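-- Replace <contenttypeid> with the content type ID in hex, without the 0x prefix
-- (CONVERT style 2 returns the hex digits without 0x).
-- KEY is a reserved word in T-SQL, hence the alias CtIdHex.
SELECT * FROM (
SELECT *, CONVERT(varchar(512), CONVERT(varbinary(512), ContentTypeId), 2) AS CtIdHex FROM [ContentTypeUsage]) AS T
WHERE CtIdHex LIKE '%<contenttypeid>%'

-- Titles of the lists that use (a descendant of) the content type
SELECT tp_Title FROM (
SELECT AllLists.tp_Title, CONVERT(varchar(512), CONVERT(varbinary(512), ContentTypeUsage.ContentTypeId), 2) AS CtIdHex
FROM [ContentTypeUsage] JOIN [AllLists] ON ContentTypeUsage.ListId = AllLists.tp_ID) AS T
WHERE CtIdHex LIKE '%<contenttypeid>%'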
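The queries above compare against the hex text that CONVERT style 2 produces, i.e. without the 0x prefix. A small Python helper (hypothetical, not part of any SharePoint tooling) can turn a content type ID as shown in SharePoint into that LIKE pattern; `sql_style2` mimics what the CONVERT expression yields for a stored binary value.

```python
import string


def sql_style2(raw: bytes) -> str:
    """Mimic CONVERT(varchar, <varbinary>, 2): uppercase hex digits, no 0x prefix."""
    return raw.hex().upper()


def usage_key_pattern(content_type_id: str) -> str:
    """Turn a content type ID such as '0x0101...' into the LIKE pattern for the queries."""
    hex_part = content_type_id[2:] if content_type_id.lower().startswith("0x") else content_type_id
    if not hex_part or len(hex_part) % 2 != 0 or any(ch not in string.hexdigits for ch in hex_part):
        raise ValueError(f"not a hex content type ID: {content_type_id!r}")
    # %...% matches the ID anywhere in the stored key, which includes keys that
    # start with it, i.e. descendants such as a list-bound child content type.
    return f"%{hex_part.upper()}%"
```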

Labels:

Content Database,

Content Type,

Feature,

Provision,

SharePoint,

SiteColumn

Saturday, August 31, 2013

Applying SharePoint FAST for unlocking SAP data

An important new mantra is search-driven applications. In fact, "search" is the new way of navigating through your information. In many organizations an important part of the business data is stored in SAP business suites. A frequent need is to navigate through the business data stored in SAP via a user-friendly and intuitive application context. For many organizations (78% according to Microsoft figures), SharePoint is the basis for the integrated employee environment.

Starting with SharePoint 2010, FAST Enterprise Search Platform (FAST ESP) is part of the SharePoint platform. All analyst firms rate FAST ESP as a leader in their scorecards for Enterprise Search technology. For organizations that have both SAP and Microsoft SharePoint in their infrastructure, the FAST search engine offers opportunities that one should not miss.

SharePoint Search

Search is one of the supporting pillars of SharePoint, and an extremely important one for realizing the SharePoint proposition of an information hub plus collaboration workplace. It is essential that information you put into SharePoint is easy to find again: by yourself of course, but especially by your colleagues. However, in the context of a 'central information hub', more is needed. Via the SharePoint workplace you must also be able to find and review data that is administered outside SharePoint. Examples are the business data stored in Line-of-Business systems (SAP, Oracle, Microsoft Dynamics), but also data stored on network shares.

With the purchase of FAST ESP, the search power of Microsoft's SharePoint platform increased sharply. All analyst firms consider FAST, along with competitors Autonomy and Google Search Appliance, 'best in class' for enterprise search technology. For example, Gartner positioned FAST as a leader in the Magic Quadrant for Enterprise Search, just above Autonomy. In the SharePoint 2010 context, FAST is introduced as a standalone extension to the Enterprise Edition, parallel to SharePoint Enterprise Search. In SharePoint 2013, Microsoft has simplified the architecture: FAST and Enterprise Search are merged, and FAST is integrated into the standard Enterprise edition and license.

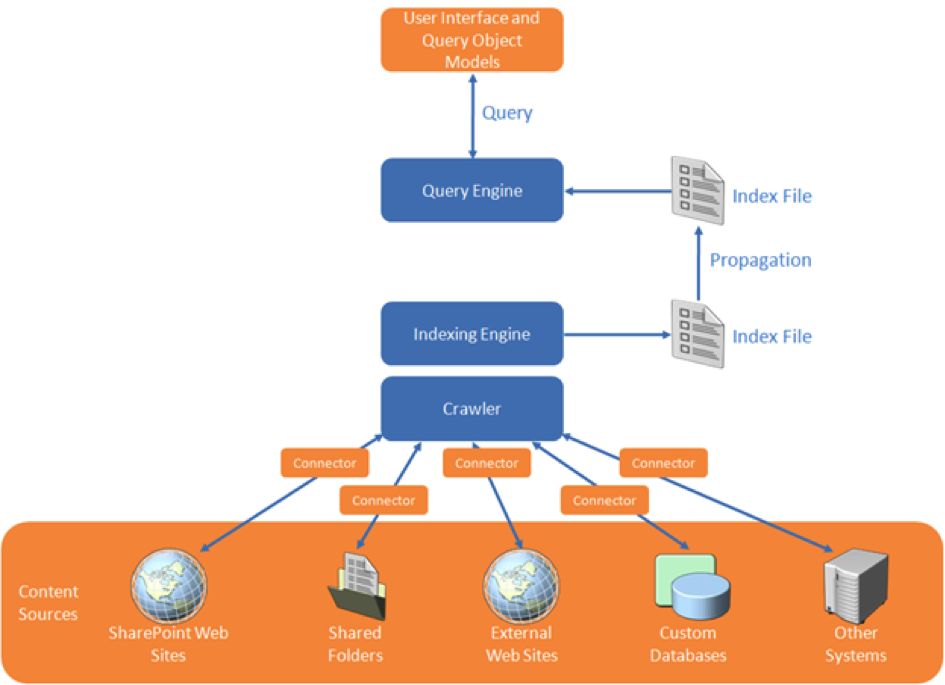

SharePoint FAST Search architecture

The logical SharePoint FAST search architecture has two main responsibilities:

- Build the search index: automatically index, in bulk, all data and information you want to search later. Depending on the environment, the data sources include SharePoint itself, administrative systems (SAP, Oracle, custom), file shares, ...

- Execute search queries against the accumulated index, and expose the search results to the user.

In the indexing step, SharePoint FAST must therefore retrieve the data from each of the linked systems. FAST Search supports this via its connector framework. There are standard connectors for (web) service invocation and for database queries, and you can custom-build a .NET connector for other ways of unlocking an external system and then 'plug' this connector into the search indexing pipeline. Examples are connecting to SAP via RFC, or 'quick-and-dirty' integration access into an in-house built system.
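As a conceptual sketch only (FAST connectors are .NET/BCS components, not Python), the split between the indexing step and the query step looks roughly like this. The connector functions `sap_courses` and `file_share` are hypothetical stand-ins for connectors plugged into the indexing pipeline; the point is that each connector only feeds the index, and query execution afterwards touches the index alone.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Set, Tuple

Document = Tuple[str, str]                  # (document id, full text)
Connector = Callable[[], Iterable[Document]]


def build_index(connectors: List[Connector]) -> Dict[str, Set[str]]:
    """Indexing step: pull every document from every connector into one inverted index."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for connector in connectors:
        for doc_id, text in connector():
            for term in text.lower().split():
                index[term].add(doc_id)
    return index


def search(index: Dict[str, Set[str]], query: str) -> Set[str]:
    """Query step: uses the index only; no source system is contacted."""
    terms = query.lower().split()
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())    # all terms must occur (AND semantics)
    return result


def sap_courses() -> Iterable[Document]:
    """Hypothetical connector standing in for SAP data fed in via BCS."""
    yield ("sap:course:1", "Project management basics")
    yield ("sap:course:2", "Advanced project planning")


def file_share() -> Iterable[Document]:
    """Hypothetical connector standing in for a network file share."""
    yield ("file:handbook.docx", "Employee handbook and planning guide")
```

For example, `search(build_index([sap_courses, file_share]), "planning")` finds both the SAP course and the file share document, without calling either source at query time.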

In this context of searching (or better: finding) SAP data, SharePoint FAST supports the indexing process via Business Connectivity Services, which connects to the SAP business system from the SharePoint environment and retrieves the business data. What still needs to be arranged is the runtime interoperability with the SAP landscape: authentication, authorization and monitoring. One option is to build these typical plumbing aspects into a custom .NET connector. But this is not an easy matter. And more significantly, it is something that end-user organizations nowadays no longer aim to do themselves, due to the development and maintenance costs involved.

An alternative is to apply Duet Enterprise for the plumbing aspects listed. Combined with SharePoint FAST, Duet Enterprise plays a role in two ways:

(1) First, during content indexing, for the connectivity to the SAP system to retrieve the data. The SAP data is then available within the SharePoint environment (stored in the FAST index files). Search query execution then happens without a link into SAP.

(2) Optionally, you go from the SharePoint application back to SAP if the use case requires exposing more detail per SAP entity selected from the search result. An example is a situation where it is absolutely necessary to show the current status, such as for a product in the warehouse: how many orders have been placed?


Security trimming: applying the SAP permissions to the data

Duet Enterprise retrieves data under the SAP account of the individual SharePoint user. This ensures that, also from the SharePoint application, you can only view those SAP data entities to which you have rights according to the SAP authorization model. Retrieving detail data is thus only allowed if you are allowed to see that data in the SAP system itself.

Due to the FAST architecture, matters are different for search query execution. As mentioned, the SAP data has already been brought into the SharePoint context, so no runtime link into the SAP system is needed to execute the query. The consequence is that Duet Enterprise, and with it the SAP authorization check, is not applied by default in this context.

In many cases this is fine (for instance in the customer example described below); in other cases it is absolutely mandatory to respect the specific SAP permissions also at the moment of query execution. The FAST search architecture supports this by enabling you to augment the indexed SAP data with the SAP authorizations as metadata.

To do this, you extend the scope of the FAST indexing process with the retrieval of SAP permissions per data entity. This meta information is used to compile an ACL per data entity. FAST query execution processes this ACL meta information, and checks for each item in the search result whether it may be exposed to this SharePoint [SAP] user.

This way of assembling the ACL information yields a static snapshot of the SAP authorizations at the time the FAST indexing process runs. If the SAP authorizations are dynamic, this is not sufficient.

For such situations, FAST query execution must be able to retrieve dynamically the SAP authorizations that apply at the moment of the query. The FAST framework offers an option to achieve this. It does require custom code, but that code is then plugged into the standard FAST processing pipeline.
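A minimal sketch of the two trimming variants, assuming a simple model in which each indexed item carries the set of principals that were allowed at indexing time. This is not the FAST security-trimming API; `IndexedItem`, `trim` and the `live_check` callback are hypothetical. Without a callback, results are filtered against the static ACL snapshot; with a callback, standing in for the custom query-time code that asks SAP for the authorizations that apply at that moment, the callback decides instead.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class IndexedItem:
    """Hypothetical index entry: SAP data plus the ACL captured at indexing time."""
    item_id: str
    title: str
    acl: FrozenSet[str]  # principals allowed when the index was built (static snapshot)


# Hypothetical query-time check (user, item_id) -> allowed, standing in for custom
# code that retrieves the SAP authorizations that apply at this moment.
LiveCheck = Callable[[str, str], bool]


def trim(results: Iterable[IndexedItem], user: str, user_groups: FrozenSet[str],
         live_check: Optional[LiveCheck] = None) -> List[IndexedItem]:
    """Return only the search results this user may see."""
    if live_check is not None:
        # Dynamic authorizations: ask at query time and ignore the possibly stale snapshot.
        return [item for item in results if live_check(user, item.item_id)]
    principals = set(user_groups) | {user}
    # Static trimming: visible if any of the user's principals is in the item's ACL.
    return [item for item in results if item.acl & principals]
```

Letting the live check replace rather than supplement the snapshot is a design choice in this sketch: otherwise a snapshot taken before a user gained new SAP rights would still hide items from that user.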

SharePoint FAST combined with Duet Enterprise thus provides standard support and multiple options for implementing SAP security trimming, and in typical cases the standard support is sufficient.

Applied in a customer situation

The above is not just theory; we actually applied it in practice. The context was opening up SAP Enterprise Learning functionality so that employees can operate it from their familiar SharePoint-based intranet. One of the use cases is that an employee searches the course catalog for a suitable training. This is a striking example of a search-driven application: you want a classified list of available courses, you want to zoom in on relevant trainings through refinement, and for each applied classification and refinement you want to see how many trainings are available. And of course you always want the ability to search freely in the full texts of the courses.

In our solution, we make the SAP data available for FAST indexing via Duet Enterprise. Duet Enterprise takes care of the connectivity, Single Sign-On, and the feed into SharePoint BCS. From there FAST takes over. The exposed SAP data is indexed via the standard FAST index pipeline; searching and displaying the results is done with the standard FAST query execution and display functionality.
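The refinement counts mentioned in the course catalog use case boil down to counting results per property value. The sketch below is illustrative only: FAST computes refiners from managed properties in its index, whereas here plain Python dicts with hypothetical properties `category` and `location` stand in for the indexed SAP course data.

```python
from collections import Counter
from typing import Dict, Iterable, List, Mapping

Course = Mapping[str, str]

# Hypothetical sample data standing in for indexed SAP course entries
CATALOG: List[Dict[str, str]] = [
    {"title": "SAP basics", "category": "IT", "location": "Amsterdam"},
    {"title": "Excel advanced", "category": "IT", "location": "Utrecht"},
    {"title": "Coaching skills", "category": "Leadership", "location": "Amsterdam"},
]


def refine(courses: Iterable[Course], **selected: str) -> List[Course]:
    """Keep only the courses that match every selected refiner value."""
    return [c for c in courses if all(c.get(k) == v for k, v in selected.items())]


def refiner_counts(courses: Iterable[Course], refiner: str) -> Dict[str, int]:
    """Count the courses per value of one refiner property, as a refinement panel shows."""
    return dict(Counter(c[refiner] for c in courses if refiner in c))
```

For example, after refining on `location="Amsterdam"`, the category refiner shows one IT course and one Leadership course.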

In this application context, specific user authorization per SAP course element does not apply. Every employee is allowed to find and review all training data. As a result we could rely on the standard application of FAST and Duet Enterprise, without the need for additional customization.

Conclusion

Microsoft SharePoint Enterprise Search and FAST are both very powerful tools for making SAP business data (and data from other Line-of-Business systems) accessible. The rich feature set of FAST ESP makes it possible to offer your employees an intuitive, search-driven user experience on top of the SAP data.

Labels:

Duet Enterprise,

Enterprise Search,

FAST,

SAP,

SharePoint 2010

Thursday, August 22, 2013

PowerShell to list all site collections in the farm with a feature activated from the ‘Farm’ definition scope

In SharePoint 2010, a Feature can be installed from a farm solution or from a sandboxed solution. In the case of a farm solution, the Feature is installed at farm level and, depending on the feature scope, is visible (unless hidden) for activation in all web applications, site collections or webs (via the GUI, PowerShell and yes, even stsadm). In the case of a sandboxed solution, which is only possible for scope Site or Web, the feature is only visible within the site collection, and can only be activated in the site collections to which the solution has been added and activated.

Today we encountered a situation in which a sandboxed solution had accidentally also been deployed on the farm. As a result, the features within the solution were installed and visible twice in each site collection to which the solution had also been added as a sandboxed solution. The remedy is to retract the accidental farm deployment. But we must take into account that features from the SharePoint solution may have been activated from the farm-deployed version.

PowerShell enables us to easily determine in which site collections in the farm the feature is activated from the farm-deployed solution:

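$snapin = Get-PSSnapin | Where-Object {$_.Name -eq 'Microsoft.SharePoint.Powershell'}
if ($snapin -eq $null) {
    Add-PSSnapin "Microsoft.SharePoint.PowerShell"
}

# ID of the feature to check
$featureId = ".......-....-....-....-............"

$contentWebAppServices = (Get-SPFarm).services | ? {$_.typename -eq "Microsoft SharePoint Foundation Web Application"}

foreach ($webApp in $contentWebAppServices.WebApplications) {
    foreach ($site in $webApp.Sites) {
        Get-SPFeature -Site $site | Where-Object {$_.Id -eq $featureId} | ForEach-Object {
            $featureName = $_.DisplayName
            # QueryFeatures returns a collection; filter it in the pipeline, because
            # PowerShell 2.0 (used by SharePoint 2010) has no member enumeration on collections.
            # Note: no ';' after the if-condition, which would be a syntax error.
            $fromFarm = $site.QueryFeatures($featureId) | Where-Object {$_.FeatureDefinitionScope -eq "Farm"}
            if ($fromFarm) {
                Write-Host $featureName " is activated from farm deployment on site: " $site.Url
            }
        }
        # SPSite objects obtained from the Sites collection must be disposed
        $site.Dispose()
    }
}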


Labels:

Administration,

Powershell

Sunday, August 18, 2013

Evaluating SharePoint Forum products

At one of the organizations I consult, there is a business demand for forum functionality on their external-facing websites. The organization has selected SharePoint as the target architecture for web applications, including public websites. SharePoint itself contains the DiscussionBoard as a (kind of) forum functionality, but it is not suited for use on external-facing websites. Among the criticisms is the look & feel, which is very ‘SharePoint-like' and not what end users expect and are typically familiar with on public websites. The DiscussionBoard also lacks forum functionality such as moderation, sticky posts, avatars, locking a post, rich text editing, tagging, rating and vote as answer. And an important restriction for use on public websites: a SharePoint DiscussionBoard in practice requires authenticated users. (OK, you can allow anonymous access, but because topics and replies are posted via SharePoint forms, you then have to give anonymous users access to the layouts folder. Not a wise decision from a security perspective.)

One option would be to custom-develop forum functionality that satisfies the extended business requirements. But because we regard a forum as commodity functionality, this is not something we want to develop and maintain ourselves. Instead, I did a market analysis and evaluation of available SharePoint forum products. It turns out to be a very small market; I found only 5 products:

- TOZIT SharePoint Discussion Forum

- Bamboo Solutions Discussion Board Plus for SharePoint

- KWizCom Discussion Board feature

- LightningTools Storm Forums

- LightningTools Social Squared

In each product evaluation I addressed the following aspects:

- Product positioning by the supplier (wide-scale internet usage, scalability?)

- Installation of the product

- Effect of the installation on the SharePoint farm (assemblies, features, application pages, databases, …)

- Product documentation: installation manual, user / usage manual

- Functional management: the actions involved to provision a new forum

- Functional Usage: using a forum, in the roles of moderator and forum user

- User experience and internet-readiness

- Branding capabilities (CSS, clean HTML)

- Product support

For the requirements of this customer, Social Squared turned out to be the best fit. It has a rich forum feature set, on the same level as what you see in other (non-SharePoint) established internet forums. Another strong point is that the forum data is administered in its own SQL database(s), outside the SharePoint content database. This makes it easy to share a forum between multiple brands of the customer, each with its public presence in its own host-named site collection. And LightningTools is a well-known software products supplier, which gives the customer's IT department confidence that the product will be adequately and timely supported. As a demonstration of this: during my evaluation I already had extensive contact with their product support, who answered the questions and issues I encountered, and also gave me insight into the Social Squared roadmap.
