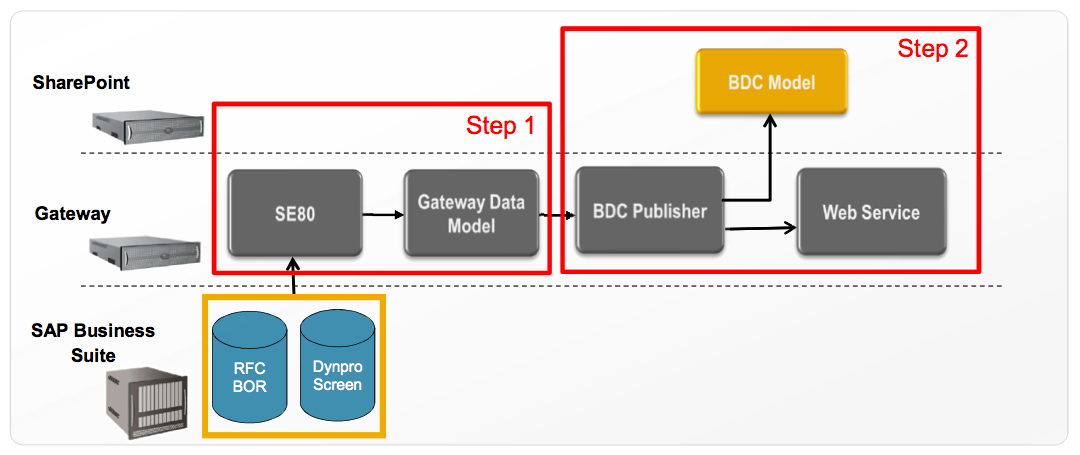

In Duet Enterprise FP1 it is relatively easy to generate a Gateway Model, as long as your SAP data sources meet the required constraints. The challenge and the intellectual work shift to the data architecture: deciding on and defining the proper data service interfaces for the application context.

Real-time retrieval versus Search indexing

Bringing SAP data into SharePoint-based front-ends can take different forms. You can unlock the SAP data in real time via ExternalList, to display and even edit the SAP data via the familiar SharePoint List UI metaphor. If the row-based List format doesn’t fit, you can develop a custom SharePoint webpart. You can also access the SAP data via the extensive SharePoint Search architecture and capabilities. With the improved Enterprise Search in SharePoint 2010 [or FAST, if the customer organization has also purchased it], more and more new applications will be architected as search-driven. In the context of SAP data, think of searching from SharePoint for a certain Supplier administered in PLM, a Customer in CRM, et cetera.

Duet Enterprise can facilitate all of the above scenarios, in combination with the strengths of the SAP and SharePoint landscapes. However, the different nature of these scenarios may well result in different approaches for the data integration architecture. In the case of real-time retrieval of SAP data to render in SharePoint, it is advisable to limit the amount of data retrieved per SharePoint - Gateway - SAP backend interoperability flow. Don’t put SAP data on the line that is not used in the front-end: only include the fields that will be displayed in the UI and are relevant to the end-user in this application context.

Search, however, has a different context. It is feasible to search on any of the field data of the SAP entity, which requires that all of that field data is indexed at SharePoint Search crawl time. So here it is advisable not to limit the amount of SAP data retrieved, but instead to retrieve as much of the SAP data as carries some functional value.

What if the same SAP data is to be unlocked both via SharePoint ExternalList and via SharePoint Enterprise Search? The data integration requirements of the two are contradictory: reduce the retrieved SAP data versus give me everything. Well, nothing prohibits you from constructing multiple data integration pipelines, each tuned for its scenario. For Search, the Gateway Model returns in its Query method all fields that carry functional value. For the real-time ExternalList, a separate Gateway Model returns in its Query method only those fields that will be rendered as list columns.
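The two pipelines can be pictured as two projections of the same entity. The following is a minimal sketch in plain Python, not Gateway or BCS code: the `SUPPLIER` record, its field names, and the `project` helper are all hypothetical and only illustrate the difference in field selection.

```python
# Hypothetical supplier record as it might come back from an SAP backend.
# All field names are illustrative and not taken from a real SAP structure.
SUPPLIER = {
    "SupplierId": "S-1001",
    "Name": "Acme Industrial",
    "City": "Rotterdam",
    "Country": "NL",
    "PaymentTerms": "30 days net",
    "ContactEmail": "sales@acme.example",
}

# Real-time ExternalList pipeline: only the fields rendered as list columns.
LIST_FIELDS = ("SupplierId", "Name", "City")

# Search pipeline: every field that carries functional value for the index.
SEARCH_FIELDS = tuple(SUPPLIER.keys())


def project(record, fields):
    """Return a copy of record restricted to the given fields, in that order."""
    return {name: record[name] for name in fields}


list_row = project(SUPPLIER, LIST_FIELDS)      # small payload for the UI
search_doc = project(SUPPLIER, SEARCH_FIELDS)  # full payload for crawling
```

The same backend entity feeds both results; only the field selection differs, which is the part each Gateway Model would fix in its Query signature.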

In Duet Enterprise 1.0, constructing the Gateway Model takes considerable time. This is an obstacle to constructing multiple Gateway Models with different data representations / signatures, as it can easily double your Gateway mapping effort and time. Luckily, in Duet Enterprise FP1 it is relatively easy to generate a Gateway Model, as long as your SAP landscape data sources meet the required constraints.

Complex SAP data source structure

If the SAP data is conceptually a flat structure, a single Gateway Model is sufficient to retrieve the data into the SharePoint context. However, we all know that SAP (or, more generally, business) data typically has a more complex structure: hierarchical, with multiple child entities. When you want to retrieve such a structure into SharePoint, you basically have 2 options.

The first is to flatten the structure. This approach has some disadvantages. When there are multiple occurrences of the same child type (e.g. Ordered Item), how many of them should you include in the flattened representation? All of them, or an arbitrarily limited number? Also, for the end-user a flattened structure can be conceptually wrong and counter-intuitive: Ordered Item information sits at a different functional level than the Purchase Order information.
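To make the flattening problem concrete, here is a small sketch in plain Python. The `ORDER` structure, the `flatten` helper, and the `Item1_...` column naming are hypothetical; they only illustrate what happens once a fixed number of child slots has to be chosen.

```python
# Hypothetical purchase order with child items; names are illustrative only.
ORDER = {
    "OrderId": "PO-4500",
    "Supplier": "Acme Industrial",
    "Items": [
        {"Material": "Bolt M8", "Quantity": 200},
        {"Material": "Nut M8", "Quantity": 200},
        {"Material": "Washer M8", "Quantity": 400},
    ],
}


def flatten(order, max_items):
    """Flatten an order into one row, keeping at most max_items child items."""
    # Copy the parent-level fields, leaving out the nested child list.
    row = {key: value for key, value in order.items() if key != "Items"}
    # Spread the first max_items children over numbered columns.
    for index, item in enumerate(order["Items"][:max_items], start=1):
        for key, value in item.items():
            row[f"Item{index}_{key}"] = value
    return row


flat = flatten(ORDER, max_items=2)
dropped = len(ORDER["Items"]) - 2  # children that no longer fit in the row
```

With two item slots the third Ordered Item silently disappears from the row, and the parent and child fields end up side by side at the same level.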

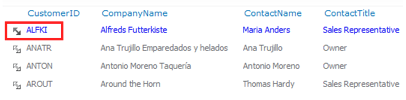

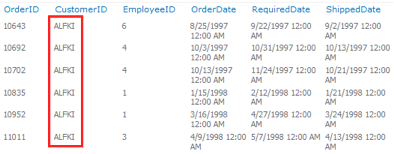

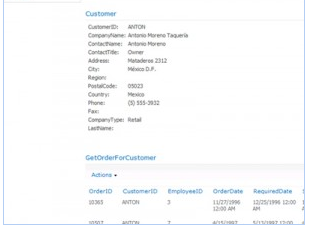

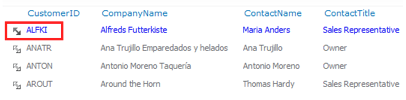

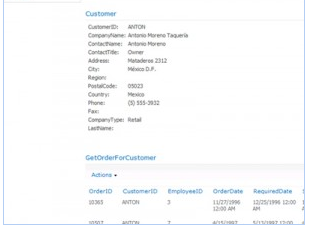

The second option is to maintain the hierarchical SAP structure within the Gateway Model architecture. For this you need to generate a Gateway Model for the parent SAP data entity, as well as one for each type of its child data entities. On the SharePoint side you then associate the resulting External ContentTypes to establish the parent-child relation. SharePoint BCS respects the association between the External ContentTypes when it operates on the data. In the case of SharePoint Search, the child associations are crawled in the context of the parent data, and either the parent or the child SAP data entity will appear in search results. The BCS Profile page of a parent data entity also renders the data of its child entities.

In the case of real-time retrieval, you can apply the BCS Business Data webparts: add to the SharePoint page both a Business Data List WebPart for the parent entity and a Business Data Related List WebPart for the child data entities. The latter displays the child SAP data entities of the parent SAP data entity that is selected in the Business Data List WebPart.
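Conceptually, the association between parent and child entities behaves like a lookup from a parent key to its related child rows. The sketch below models that in plain Python; it does not use the BCS API, and the `ORDERS` and `ITEMS` data as well as the `build_association` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical parent and child entity sets, each as it might be returned
# by its own Gateway Model. The child rows carry the parent key (OrderId).
ORDERS = [
    {"OrderId": "PO-4500", "Supplier": "Acme Industrial"},
    {"OrderId": "PO-4501", "Supplier": "Globex"},
]
ITEMS = [
    {"OrderId": "PO-4500", "Material": "Bolt M8", "Quantity": 200},
    {"OrderId": "PO-4500", "Material": "Nut M8", "Quantity": 200},
    {"OrderId": "PO-4501", "Material": "Gasket", "Quantity": 50},
]


def build_association(children, foreign_key):
    """Group child rows by the value of their parent key."""
    index = defaultdict(list)
    for child in children:
        index[child[foreign_key]].append(child)
    return index


items_by_order = build_association(ITEMS, "OrderId")

# Selecting a parent row drives which child rows are shown next to it.
selected = ORDERS[0]
related = items_by_order[selected["OrderId"]]
```

Unlike the flattened representation, every child row is preserved, and the parent and child data keep their own levels.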