For the last six years I have focused on using SharePoint as an additional channel to expose SAP data and operate SAP functionality. As I am now about to start another challenge in the broad SharePoint arena, I decided to write down my advice on how to achieve such SAP/SharePoint integration: what approach to take, architectural advice, and which steps to follow. I deliberately do not elaborate on technology products, as these are by nature only temporary. Instead I focus my outline on concepts.

Rough outline of approach

- Establish the reference solution architecture for integrating SAP business process handling into a SharePoint front-end / UI

- Establish which of the business processes to expose via SharePoint as an additional channel, and which to keep solely in the SAP UI channel

- Establish the IST (as-is) situation: business process definitions and supporting landscape, enterprise IT strategy and roadmap, IT landscape.

- Establish the SOLL (to-be) situation.

- Determine the IST-SOLL gap

- Map the solution architecture + IST-SOLL analysis onto concrete technology: Microsoft and SAP.

- Architect, design, develop, test and implement specific scenario(s).

Architecture guidelines

- Apply a layered architecture

- Loosely coupled front-end / back-office system landscape through the use of interfaces (contracts)

- Base interoperability on standards: web services, security standards, monitoring

- Abstract the LOB system's business process handling as a contract specification

- Extend the decoupled operation in the alternative front-end with the concept of an Operational Data Store. This enables temporary administration outside the external LOB system, and allows an administrative action to be prepared completely before it is submitted into the external Line of Business system.
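The contract and Operational Data Store guidelines above can be sketched roughly as follows. This is a minimal illustration, not a reference implementation: the leave-request scenario, the names `LeaveRequestContract`, `FakeLobSystem` and `OperationalDataStore`, and the required fields are all hypothetical and chosen only to make the idea concrete.

```python
from dataclasses import dataclass, field
from typing import Protocol


class LeaveRequestContract(Protocol):
    """Hypothetical contract: the only thing the front-end knows about the LOB system."""

    def submit(self, request: dict) -> str:
        ...


@dataclass
class FakeLobSystem:
    """Stand-in for a real LOB adapter; exists only for this illustration."""
    received: list = field(default_factory=list)

    def submit(self, request: dict) -> str:
        self.received.append(request)
        return f"DOC-{len(self.received)}"


@dataclass
class OperationalDataStore:
    """Keeps drafts outside the LOB system until they are complete."""
    backend: LeaveRequestContract
    required: tuple = ("employee", "start", "end")  # assumed mandatory fields
    drafts: dict = field(default_factory=dict)

    def save_draft(self, draft_id: str, **fields) -> None:
        # Drafts can be built up over several sessions.
        self.drafts.setdefault(draft_id, {}).update(fields)

    def missing_fields(self, draft_id: str) -> list:
        draft = self.drafts[draft_id]
        return [name for name in self.required if name not in draft]

    def submit(self, draft_id: str) -> str:
        # Only a fully prepared action crosses the contract boundary.
        missing = self.missing_fields(draft_id)
        if missing:
            raise ValueError(f"draft incomplete, missing: {missing}")
        return self.backend.submit(self.drafts.pop(draft_id))
```

The front-end only talks to the store and the contract, so the LOB adapter behind `backend` can be replaced without touching the user-facing code.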

Main steps for a specific scenario

Per specific scenario / use case to expose an external LOB system in SharePoint:

- Identify the data and functionality of the external LOB system that you want to expose via SharePoint as an (additional) channel for user operation.

- Identify, within the platform of the external LOB system, the building blocks (functional and technical) that can be used to operate the identified data and functions. The external LOB system and its internal workings are a (magical) black box to the uninitiated. You need the aid of a knowledgeable business analyst to help identify the proper functional building blocks, and to explain how to utilize and invoke them.

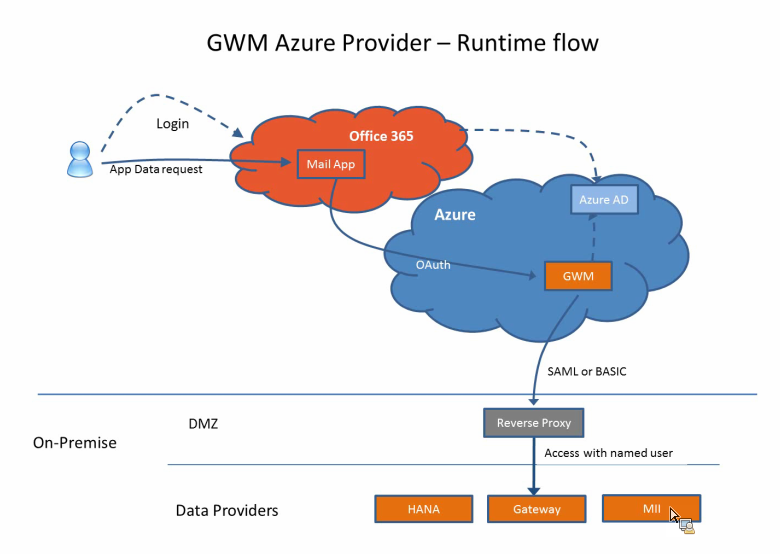

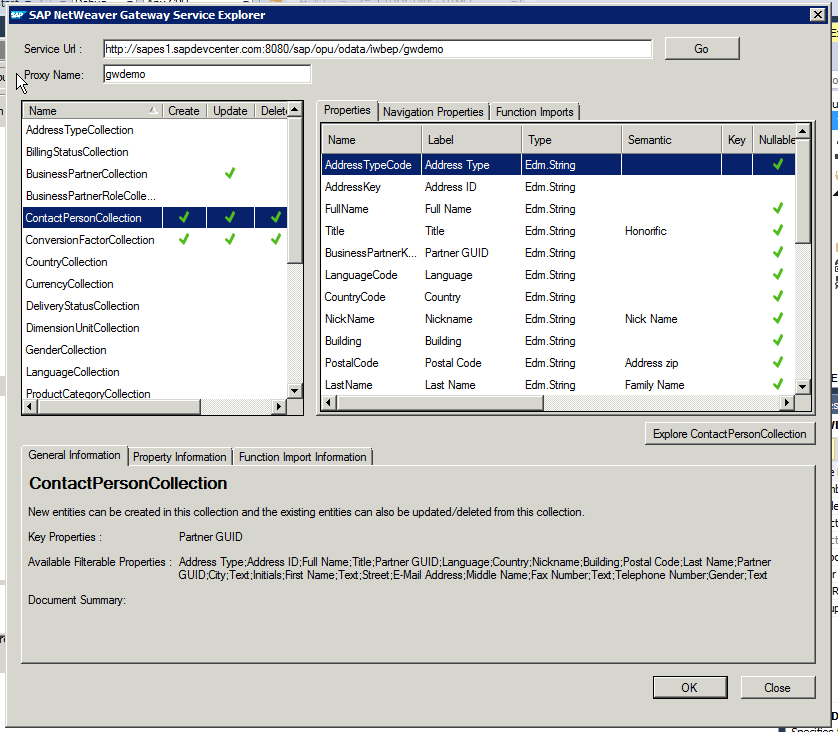

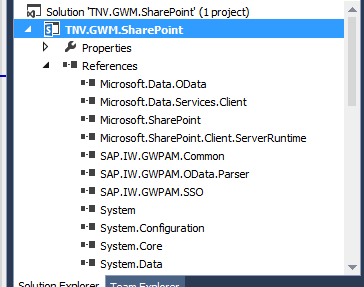

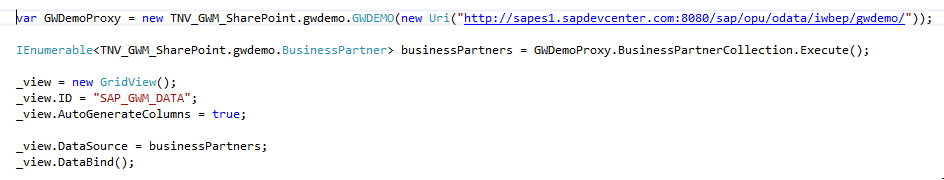

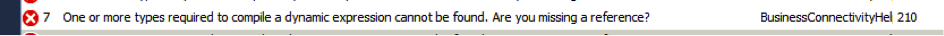

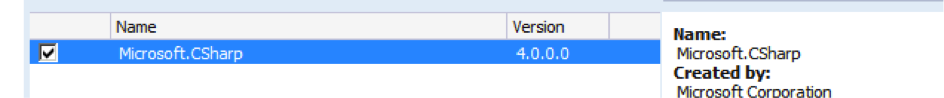

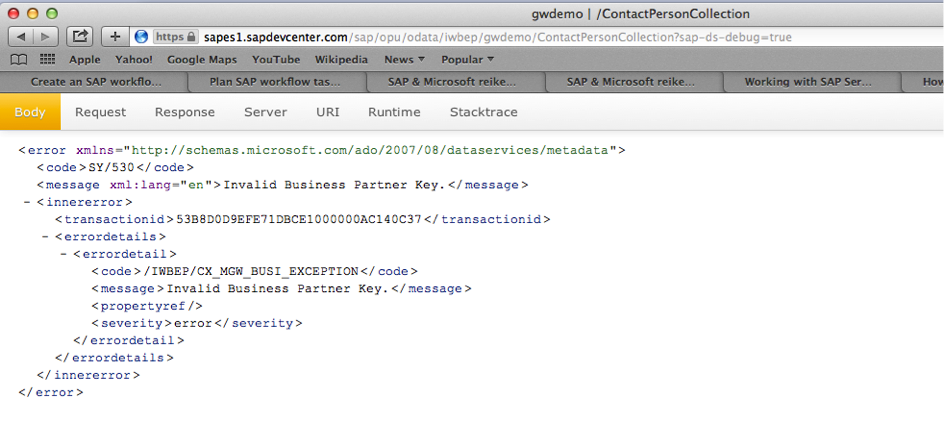

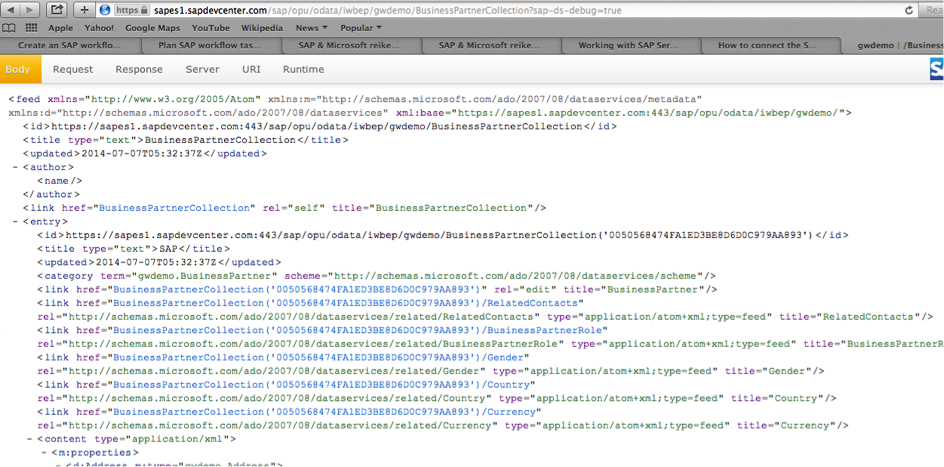

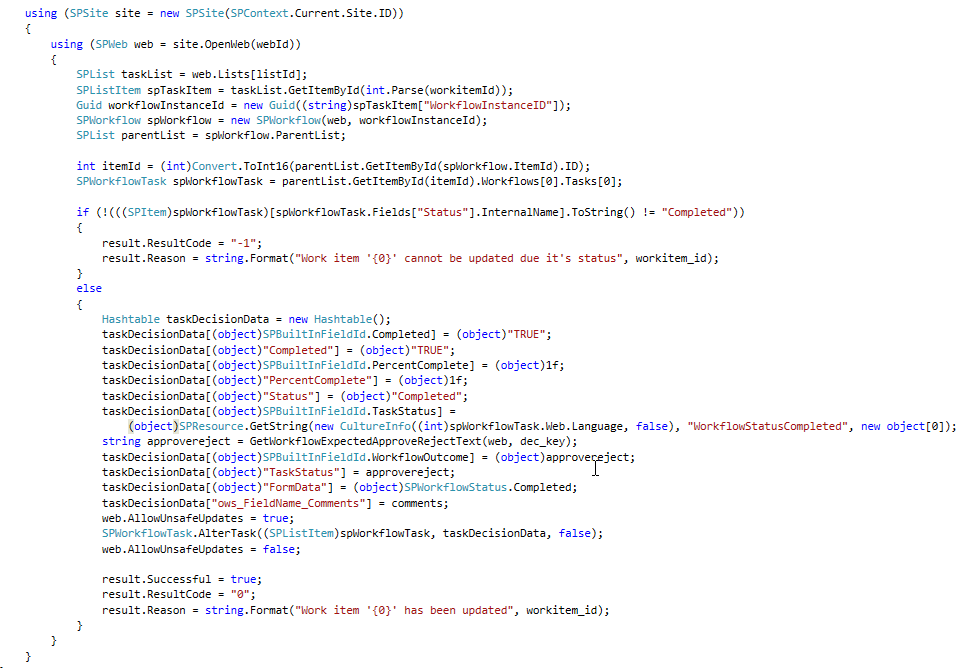

- Service-enable the identified building blocks. Don’t be fooled by the concept of SAP Enterprise Services. In practice, these are only usable in a (SAP) laboratory scope, not in the concrete situation of an end-organization. In reality you need to provide your own service layer to expose the identified building blocks. SAP has acknowledged this, and provides multiple supporting foundations and building blocks. In my opinion, currently the most significant is SAP Gateway, which exposes SAP data and processes as REST services. For consumption in Microsoft clients, SAP delivers Duet Enterprise and Gateway for Microsoft, which extend the basic interoperability capabilities of SAP Gateway. Besides SAP's own offerings, frameworks from other suppliers are also available on the market to service-enable SAP. A disadvantage is that they may complicate your IT landscape. The trend in recent years in end-organizations is to consolidate on a SAP + Microsoft IT policy.

[Figure: High-Level Architecture]
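To give an impression of what consuming a service-enabled building block can look like, here is a small sketch that composes a request URL for an OData service of the kind SAP Gateway exposes. The host `sap.example.com`, the service `ZLEAVE_SRV` and the entity set `LeaveRequests` are hypothetical. The `/sap/opu/odata/sap/` prefix is assumed as the usual Gateway path and may differ in your landscape. The sketch only builds the URL; authentication and the actual HTTP call are left out.

```python
from urllib.parse import quote, urlencode


def build_odata_url(host, service, entity_set, filters=None, top=None):
    """Compose a read URL for a (hypothetical) Gateway OData service."""
    # Assumed default Gateway path; verify against your own system.
    base = f"https://{host}/sap/opu/odata/sap/{quote(service)}/{quote(entity_set)}"
    params = {"$format": "json"}
    if filters:
        # OData escapes a single quote inside a string literal by doubling it.
        clauses = [
            "{} eq '{}'".format(name, str(value).replace("'", "''"))
            for name, value in filters.items()
        ]
        params["$filter"] = " and ".join(clauses)
    if top is not None:
        params["$top"] = str(top)
    # Keep '$' and quotes readable; encode spaces as %20 rather than '+'.
    query = urlencode(params, safe="$'", quote_via=quote)
    return f"{base}?{query}"


url = build_odata_url(
    "sap.example.com", "ZLEAVE_SRV", "LeaveRequests",
    filters={"Status": "OPEN"}, top=5,
)
print(url)
```

A SharePoint-side component would issue this request through whatever HTTP client and single sign-on mechanism the landscape prescribes. The point here is only that the SAP building block is reached through a plain, standards-based URL rather than an SAP-specific protocol.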