Wednesday, December 12, 2018

Unpredictable and therefore unreliable crawling of User Profiles in SharePoint Online

Friday, November 30, 2018

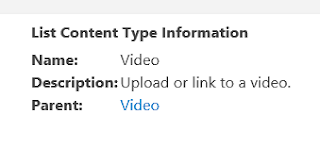

Beware: using an Asset Library for video capability fails due to missing feature activation

(Figure: incomplete content type chain vs. correct content type chain)

- The “Video” content type on library level still misses the essential site columns of “Video”

- Uploading video files even results in SharePoint throwing an error.

Tuesday, November 27, 2018

Beware: Azure AD B2B guests inconsistently resolved in PeoplePicker

Wednesday, October 31, 2018

Beware: governance of SharePoint site underneath MS Teams largely gone

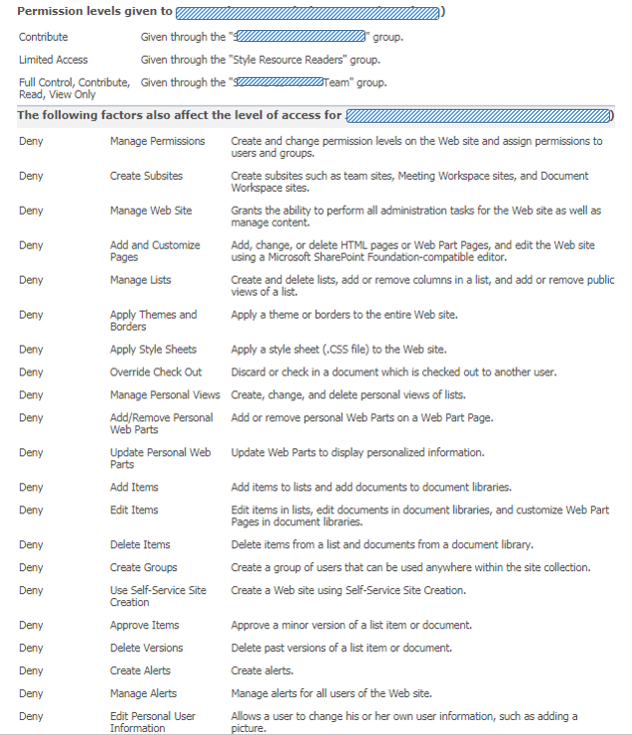

- The powerful authorizations via the Site Collection Administrator (SCA) role are reserved to IT support only, and business users are authorized via SharePoint Groups + Permission Levels (see e.g. Site Owner vs Site Collection Administrator)

- Pre-defined site structures (in the old days via Site Definitions; nowadays via Site Templates, provisioning code (e.g. PnP provisioning))

- Organization-consistent branding of the sites: logo, site classification, layout, ...

- Naming conventions for site titles and URLs

- Version Control + Content Approval policies

- Metadata (Managed + Folksonomy)

- Controlled availability of SharePoint Designer, enabling the business power-users to self-create workflows, customize views, create structure, ...

- Prerequisites imposed on the site requestor, checked by the helpdesk that handles the site provisioning process

- Lifecycle model

- ...

Saturday, September 22, 2018

Utilize Azure Function to resolve lack of CORS awareness within SharePoint Online active authentication flow

Sunday, September 16, 2018

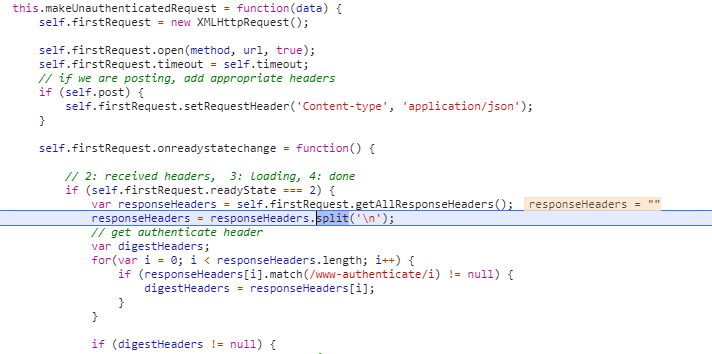

Digest Authenticated API should obey CORS

Wednesday, August 22, 2018

Resolve conflict between 'Content Approval' and SharePoint Workflow

- Generic list with 'Content Approval' and 'Versioning' configured;

- SharePoint Designer Workflow on ItemChange, in which the approval status of the item is checked and, if it is 'Approved', a copy of the item is created in the 'publish' location.

- Executing the workflow triggered on ItemChange itself results in the reached workflow stage being recorded as 'metadata' on the item that triggered the workflow.

- And although the actual content of the item remains unchanged, the standard 'Content Approval' handling treats this as a change compared to the approved version of the item, and automatically resets the approval status to 'Pending';

- When the workflow retrieves the 'Approval Status' field, it has therefore already been reset to 'Pending'.
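To observe this reset from outside the workflow, the approval status of the item can be read and decoded. The following is a minimal sketch. It assumes the item is read through the SharePoint REST API with `Accept: application/json;odata=verbose`, in which (as far as I know) the internal field `_ModerationStatus` is exposed as `OData__ModerationStatus`. The numeric values follow the SharePoint moderation status enumeration (0 Approved, 1 Denied, 2 Pending, 3 Draft, 4 Scheduled). Both function names are hypothetical helpers, not part of any SharePoint tooling.

```bash
#!/usr/bin/env bash
# Hypothetical helpers to inspect the approval status of a list item.
# Assumption: the item JSON comes from the SharePoint REST API with
# "Accept: application/json;odata=verbose" and is on a single line; the internal
# field _ModerationStatus then appears as "OData__ModerationStatus".

# Map the numeric moderation status to its label
moderation_status_label() {
  case "$1" in
    0) echo "Approved" ;;
    1) echo "Denied" ;;
    2) echo "Pending" ;;
    3) echo "Draft" ;;
    4) echo "Scheduled" ;;
    *) echo "Unknown" ;;
  esac
}

# Read the item JSON from stdin and print the numeric moderation status
moderation_status_from_json() {
  sed -n 's/.*"OData__ModerationStatus":\([0-9]\).*/\1/p'
}
```

For example, piping the output of `curl -b <cookie file> -H "Accept: application/json;odata=verbose" "<site>/_api/web/lists/getbytitle('<list>')/items(<id>)"` into `moderation_status_from_json` would, per the behavior described above, be expected to yield `2` (Pending) right after the workflow ran, rather than `0` (Approved).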

Thursday, July 12, 2018

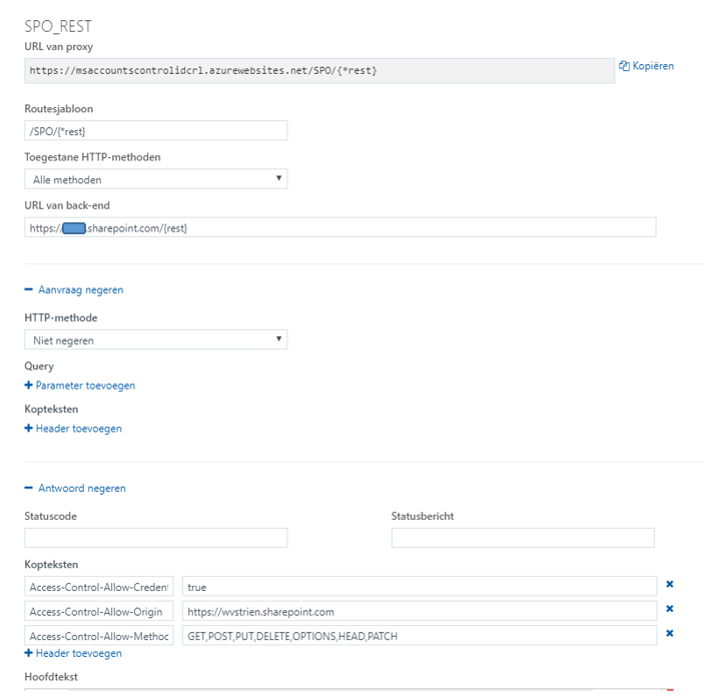

Utilize Azure Function Proxy to resolve lack of CORS awareness within passive OAuth authentication flow

Augment the response to be CORS-aware

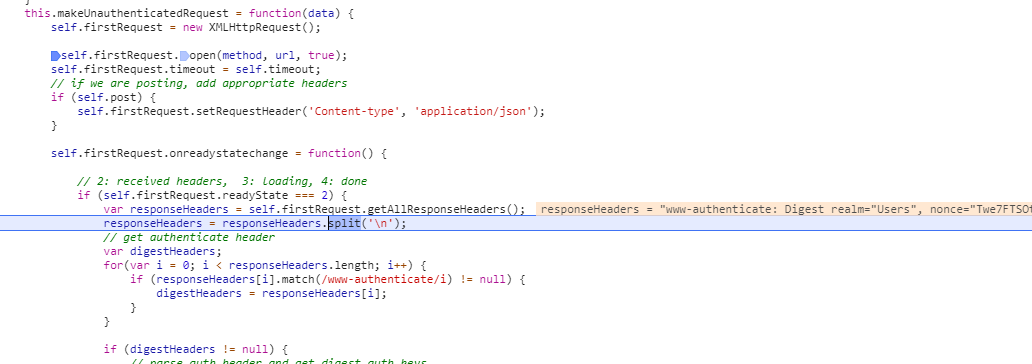
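The augmentation has to be done by a component that sits between the authentication endpoint and the browser (here: the Azure Function Proxy), before the browser inspects the response. The sketch below only illustrates which headers such a proxy adds. It is not the Azure Function Proxy configuration itself.

Several assumptions apply:

- The upstream response headers arrive on stdin without the terminating blank line.
- The browser call is made with credentials (`withCredentials`).
- `add_cors_headers` is a hypothetical helper name.

With credentials, browsers reject a wildcard `Access-Control-Allow-Origin: *`, so the calling origin is echoed explicitly. Preflight (OPTIONS) handling is not covered.

```bash
#!/usr/bin/env bash
# Hypothetical helper illustrating what a proxy in front of the authentication
# endpoint adds to the upstream response. Assumptions: response headers arrive on
# stdin (CRLF or LF line endings, no terminating blank line), and the browser
# calls with credentials, so a wildcard origin would be rejected.

add_cors_headers() {
  local origin="$1"
  # Normalize line endings; drop blank lines and any CORS headers from upstream
  tr -d '\r' | awk 'NF && tolower($0) !~ /^access-control-allow-/'
  # Echo the calling origin explicitly and allow credentials (cookies)
  printf 'Access-Control-Allow-Origin: %s\n' "$origin"
  printf 'Access-Control-Allow-Credentials: true\n'
}
```

Because the browser's cross-origin check runs natively on the received response, these headers only have an effect when they are already present on the wire. They have no effect when added afterwards in page script.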

For browsers to accept the cross-domain OAuth authentication flow, the solution is to modify the received response so that it is augmented with the needed CORS headers. In a first attempt, I tried to augment the response by overriding (the methods of) the XMLHttpRequest object in JavaScript. Not surprisingly, this fails: the browser's built-in cross-origin protection inspects the HTTP response at the native level, and cannot be deceived by manipulating the received HTTP response within the JavaScript runtime context. From a security perspective this makes sense; otherwise the cross-origin protection could easily be circumvented by malicious code.

Tuesday, June 12, 2018

Inject dynamic-filtering into classic-mode ListView

Sunday, May 20, 2018

Authenticate from Curl into SharePoint Online with Modern Authentication

#General variables

ProxyAccount="sa-curlAccount"

ProxyPassword="******************"

ProxyProtocol="http"

ProxyServer="xxx.xxx.xxx.xxx"

ProxyPort="8080"

SharePointCurlAccount="sa-curlAccount"

SharePointOnlineTenant="<URL of SharePoint Online tenant>"

UploadFile="<file to upload>"

UploadLocation="<URL of SharePoint Document Library>"

#Fixed variables

OUTPUT=${HOME}/Interop/output

TMP=${HOME}/Interop/tmp/spo

#the following steps are required to upload data from Curl context to SharePoint Online:

#

#1. Retrieve an authentication cookie to Office 365 through invocation of webservices

#1.a. (Optional) Step 0: determine the URL of the custom Security Token Service (STS) to next

# request a SAML:assertion for account identified by credentials

#1.b. Step 1: request SAML:assertion from the identified custom STS for account identified by

# credentials

#1.c. Step 2: use the SAML:assertion to request binary security token from Office 365

#1.d. Step 3: use the binary security token to retrieve the authentication cookie

#2. Step 4: Use that Office 365 authentication cookie in subsequent webservice requests to

# SharePoint Online REST API

#1.a. (Optional) Step 0: determine the URL of the custom Security Token Service (STS) to next

# request a SAML:assertion for account identified by credentials (outside datacenter, with proxy)

curl -U ${ProxyAccount}:${ProxyPassword} -k -x ${ProxyProtocol}://${ProxyServer}:${ProxyPort} -X POST -H "Content-Type: application/x-www-form-urlencoded" -d "login=${SharePointCurlAccount}&xml=1" https://login.microsoftonline.com/GetUserRealm.srf -w "\n" > ${TMP}/O365_response_step_0

#Extract requested STSAuthURL from response step 0

STSURL=`sed -n 's:.*<STSAuthURL>\(.*\)</STSAuthURL>.*:\1:p' ${TMP}/O365_response_step_0`

#Create input for step 1

File: O365_request_step_1-1

<?xml version="1.0" encoding="UTF-8"?>

<s:Envelope

xmlns:s="http://www.w3.org/2003/05/soap-envelope"

xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd"

xmlns:saml="urn:oasis:names:tc:SAML:1.0:assertion"

xmlns:wsp="http://schemas.xmlsoap.org/ws/2004/09/policy"

xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd"

xmlns:wsa="http://www.w3.org/2005/08/addressing"

xmlns:wssc="http://schemas.xmlsoap.org/ws/2005/02/sc"

xmlns:wst="http://schemas.xmlsoap.org/ws/2005/02/trust">

<s:Header>

<wsa:Action s:mustUnderstand="1">http://schemas.xmlsoap.org/ws/2005/02/trust/RST/Issue</wsa:Action>

<wsa:To s:mustUnderstand="1">https://sts.<tenant>.com/adfs/services/trust/2005/usernamemixed</wsa:To>

<wsa:MessageID>b07da3ec-9824-46a5-a102-2329e0c5f63f</wsa:MessageID>

<ps:AuthInfo

xmlns:ps="http://schemas.microsoft.com/Passport/SoapServices/PPCRL" Id="PPAuthInfo">

<ps:HostingApp>Managed IDCRL</ps:HostingApp>

<ps:BinaryVersion>6</ps:BinaryVersion>

<ps:UIVersion>1</ps:UIVersion>

<ps:Cookies></ps:Cookies>

<ps:RequestParams>AQAAAAIAAABsYwQAAAAxMDMz</ps:RequestParams>

</ps:AuthInfo>

<wsse:Security>

<wsse:UsernameToken wsu:Id="user">

<wsse:Username>sa-curlAccount@<tenant>.com</wsse:Username>

<wsse:Password>*************</wsse:Password>

</wsse:UsernameToken>

<wsu:Timestamp Id="Timestamp">

File: O365_request_step_1-2

</wsu:Timestamp>

</wsse:Security>

</s:Header>

<s:Body>

<wst:RequestSecurityToken Id="RST0">

<wst:RequestType>http://schemas.xmlsoap.org/ws/2005/02/trust/Issue</wst:RequestType>

<wsp:AppliesTo>

<wsa:EndpointReference>

<wsa:Address>urn:federation:MicrosoftOnline</wsa:Address>

</wsa:EndpointReference>

</wsp:AppliesTo>

<wst:KeyType>http://schemas.xmlsoap.org/ws/2005/05/identity/NoProofKey</wst:KeyType>

</wst:RequestSecurityToken>

</s:Body>

</s:Envelope>

cat ${TMP}/O365_request_step_1-1 > ${TMP}/O365_request_step_1

echo "<wsu:Created>`date -u +'%Y-%m-%dT%H:%M:%SZ'`</wsu:Created>" >> ${TMP}/O365_request_step_1

echo "<wsu:Expires>`date -u +'%Y-%m-%dT%H:%M:%SZ' --date='+15 minutes'`</wsu:Expires>" >> ${TMP}/O365_request_step_1

cat ${TMP}/O365_request_step_1-2 >> ${TMP}/O365_request_step_1

#1.b. Step 1: request SAML:assertion from the identified custom STS for account identified by

# credentials (internal datacenter, without webproxy to outside)

curl -X POST -H "Content-Type: application/soap+xml; charset=utf-8" -d "@${TMP}/O365_request_step_1" ${STSURL} -w "\n" > ${TMP}/O365_response_step_1

#Extract requested SAML:assertion from response step 1

sed 's/^.*\(<saml:Assertion.*saml:Assertion>\).*$/\1/' ${TMP}/O365_response_step_1 > ${TMP}/O365_response_step_1.tmp

#Create input for step 2

File: O365_request_step_2-1

<?xml version="1.0" encoding="UTF-8"?>

<S:Envelope

xmlns:S="http://www.w3.org/2003/05/soap-envelope"

xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd"

xmlns:wsp="http://schemas.xmlsoap.org/ws/2004/09/policy"

xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd"

xmlns:wsa="http://www.w3.org/2005/08/addressing"

xmlns:wst="http://schemas.xmlsoap.org/ws/2005/02/trust">

<S:Header>

<wsa:Action S:mustUnderstand="1">http://schemas.xmlsoap.org/ws/2005/02/trust/RST/Issue</wsa:Action>

<wsa:To S:mustUnderstand="1">https://login.microsoftonline.com/rst2.srf</wsa:To>

<ps:AuthInfo

xmlns:ps="http://schemas.microsoft.com/LiveID/SoapServices/v1" Id="PPAuthInfo">

<ps:BinaryVersion>5</ps:BinaryVersion>

<ps:HostingApp>Managed IDCRL</ps:HostingApp>

</ps:AuthInfo>

<wsse:Security>

File: O365_request_step_2-2

</wsse:Security>

</S:Header>

<S:Body>

<wst:RequestSecurityToken xmlns:wst="http://schemas.xmlsoap.org/ws/2005/02/trust" Id="RST0">

<wst:RequestType>http://schemas.xmlsoap.org/ws/2005/02/trust/Issue</wst:RequestType>

<wsp:AppliesTo>

<wsa:EndpointReference>

<wsa:Address>sharepoint.com</wsa:Address>

</wsa:EndpointReference>

</wsp:AppliesTo>

<wsp:PolicyReference URI="MBI"></wsp:PolicyReference>

</wst:RequestSecurityToken>

</S:Body>

</S:Envelope>

cat ${TMP}/O365_request_step_2-1 > ${TMP}/O365_request_step_2

cat ${TMP}/O365_response_step_1.tmp >> ${TMP}/O365_request_step_2

cat ${TMP}/O365_request_step_2-2 >> ${TMP}/O365_request_step_2

rm ${TMP}/O365_response_step_1.tmp

#1.c. Step 2: use the SAML:assertion to request binary security token from Office 365

# (outside datacenter, with proxy)

curl -U ${ProxyAccount}:${ProxyPassword} -k -x ${ProxyProtocol}://${ProxyServer}:${ProxyPort} -X POST -H "Content-Type: application/soap+xml; charset=utf-8" -d "@${TMP}/O365_request_step_2" https://login.microsoftonline.com/RST2.srf -w "\n" > ${TMP}/O365_response_step_2

#Extract requested binary security token from response step 2

sed 's/^.*\(<wsse:BinarySecurityToken.*wsse:BinarySecurityToken>\).*$/\1/' ${TMP}/O365_response_step_2 > ${TMP}/O365_response_step_2.tmp

#Create input for step 3

cat ${TMP}/O365_response_step_2.tmp | cut -d'>' -f2 | cut -d'<' -f1 > ${TMP}/O365_request_step_3

BinarySecurityToken=`cat ${TMP}/O365_request_step_3`

rm ${TMP}/O365_response_step_2.tmp

#1.d. Step 3: use the binary security token to retrieve the authentication cookie (outside

# datacenter, need to pass webproxy)

curl -v -U ${ProxyAccount}:${ProxyPassword} -k -x ${ProxyProtocol}://${ProxyServer}:${ProxyPort} -X GET -H "Authorization: BPOSIDCRL ${BinarySecurityToken}" -H "X-IDCRL_ACCEPTED: t" -H "User-Agent:" ${SharePointOnlineTenant}/_vti_bin/idcrl.svc/ > ${TMP}/O365_response_step_3 2>&1

#Remove DOS carriage returns (^M) from response step 3

tr -d '\r' < ${TMP}/O365_response_step_3 > ${TMP}/O365_response_step_3.tmp

#Extract requested authentication cookie from response step 3 and create input for step 4

echo "Set-Cookie: SPOIDCRL=`grep 'Set-Cookie: SPOIDCRL=' ${TMP}/O365_response_step_3.tmp | head -n 1 | awk -F'SPOIDCRL=' '{print $2}'`" > ${TMP}/O365_request_step_4

rm ${TMP}/O365_response_step_3.tmp

#2. Step 4: Use that Office 365 authentication cookie in subsequent webservice requests to

# SharePoint Online REST API (outside datacenter, with proxy)

curl -U ${ProxyAccount}:${ProxyPassword} -k -x ${ProxyProtocol}://${ProxyServer}:${ProxyPort} -b ${TMP}/O365_request_step_4 -T "{${OUTPUT}/${UploadFile}}" ${UploadLocation}

exit 0

#Alternative for step 4 (not reached due to the 'exit 0' above): upload via the REST API, which first requires a form digest

# from the target site's /_api/contextinfo; the POST with X-HTTP-Method: PUT overwrites the content of an existing file

curl -U ${ProxyAccount}:${ProxyPassword} -k -x ${ProxyProtocol}://${ProxyServer}:${ProxyPort} -b ${TMP}/O365_request_step_4 -X POST -H "Accept: application/json;odata=verbose" -d "" ${SharePointOnlineTenant}/teams/siteX/_api/contextinfo > ${TMP}/O365_response_step_4.tmp

FormDigest=`sed -n 's|.*"FormDigestValue":"\([^"]*\)".*|\1|p' ${TMP}/O365_response_step_4.tmp`

rm ${TMP}/O365_response_step_4.tmp

curl -U ${ProxyAccount}:${ProxyPassword} -k -x ${ProxyProtocol}://${ProxyServer}:${ProxyPort} -b ${TMP}/O365_request_step_4 -X POST -H "X-RequestDigest: ${FormDigest}" -H "X-HTTP-Method: PUT" --data-binary "@${OUTPUT}/${UploadFile}" "${SharePointOnlineTenant}/teams/siteX/_api/web/GetFileByServerRelativeUrl('/teams/siteX/Shared%20Documents/SubFolder/${UploadFile}')/\$value"
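The sed/cut extractions in the script above assume a federated account and a single matching response line. A minimal sketch of slightly more defensive helpers follows. It assumes that the `GetUserRealm.srf` response (with `xml=1`) contains a `<NameSpaceType>` element with the value `Managed` or `Federated`, and that curl's verbose output prefixes response header lines with `< `. All three function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers around the responses of step 0 and step 3 of the script.
# Assumptions: GetUserRealm.srf (xml=1) returns <NameSpaceType> and, for federated
# accounts, <STSAuthURL>; each response is read from stdin.

# Step 0: "Federated" (custom STS involved) or "Managed" (cloud-only account)
realm_namespace_type() {
  tr -d '\r\n' | sed -n 's:.*<NameSpaceType>\([^<]*\)</NameSpaceType>.*:\1:p'
}

# Step 0: URL of the custom STS; prints nothing when the element is absent
realm_sts_url() {
  tr -d '\r\n' | sed -n 's:.*<STSAuthURL>\([^<]*\)</STSAuthURL>.*:\1:p'
}

# Step 3: value of the SPOIDCRL cookie from curl -v output ("< Set-Cookie: ...")
spoidcrl_cookie() {
  tr -d '\r' | sed -n 's/.*Set-Cookie: SPOIDCRL=\([^;]*\).*/\1/p' | head -n 1
}
```

For example, `if [ "$(realm_namespace_type < ${TMP}/O365_response_step_0)" = "Federated" ]; then ...; fi` could guard step 1. For a managed (cloud-only) account there is typically no custom STS to request a SAML:assertion from, which is why step 0 is marked optional.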

Friday, May 11, 2018

How to resolve a peculiarity with .aspx file upload from an automated client context

Tuesday, April 17, 2018

Peculiarity with Active Authentication issues from VBA

Private Type GUID_TYPE

Data1 As Long

Data2 As Integer

Data3 As Integer

Data4(7) As Byte

End Type

Private Declare PtrSafe Function CoCreateGuid Lib "ole32.dll" (guid As GUID_TYPE) As LongPtr

Private Declare PtrSafe Function StringFromGUID2 Lib "ole32.dll" (guid As GUID_TYPE, ByVal lpStrGuid As LongPtr, ByVal cbMax As Long) As LongPtr

Private Function GetO365SPO_SAMLAssertionIntegrated() As String

Dim CustomStsUrl As String, CustomStsSAMLRequest As String, stsMessage As String

CustomStsUrl = "https://sts.<tenant>.com/adfs/services/trust/2005/windowstransport"

CustomStsSAMLRequest = "<?xml version=""1.0"" encoding=""UTF-8""?><s:Envelope xmlns:s=""http://www.w3.org/2003/05/soap-envelope"" xmlns:a=""http://www.w3.org/2005/08/addressing"">" & _

"<s:Header>" & _

"<a:Action s:mustUnderstand=""1"">http://schemas.xmlsoap.org/ws/2005/02/trust/RST/Issue</a:Action>" & _

"<a:MessageID>urn:uuid:[[messageID]]</a:MessageID>" & _

"<a:ReplyTo><a:Address>http://www.w3.org/2005/08/addressing/anonymous</a:Address></a:ReplyTo>" & _

"<a:To s:mustUnderstand=""1"">[[mustUnderstand]]</a:To>" & _

"</s:Header>"

CustomStsSAMLRequest = CustomStsSAMLRequest & _

"<s:Body>" & _

"<t:RequestSecurityToken xmlns:t=""http://schemas.xmlsoap.org/ws/2005/02/trust"">" & _

"<wsp:AppliesTo xmlns:wsp=""http://schemas.xmlsoap.org/ws/2004/09/policy"">" & _

"<wsa:EndpointReference xmlns:wsa=""http://www.w3.org/2005/08/addressing"">" & _

"<wsa:Address>urn:federation:MicrosoftOnline</wsa:Address></wsa:EndpointReference>" & _

"</wsp:AppliesTo>" & _

"<t:KeyType>http://schemas.xmlsoap.org/ws/2005/05/identity/NoProofKey</t:KeyType>" & _

"<t:RequestType>http://schemas.xmlsoap.org/ws/2005/02/trust/Issue</t:RequestType>" & _

"</t:RequestSecurityToken>" & _

"</s:Body>" & _

"</s:Envelope>"

stsMessage = Replace(CustomStsSAMLRequest, "[[messageID]]", Mid(O365SPO_CreateGuidString(), 2, 36))

stsMessage = Replace(stsMessage, "[[mustUnderstand]]", CustomStsUrl)

' Create HTTP Object ==> make sure to use "MSXML2.XMLHTTP" instead of "MSXML2.ServerXMLHTTP.6.0", as the latter does not send the NTLM

' credentials as Authorization header.

Dim Request As Object

Set Request = CreateObject("MSXML2.XMLHTTP")

' Get SAML:assertion

Request.Open "POST", CustomStsUrl, False

Request.setRequestHeader "Content-Type", "application/soap+xml; charset=utf-8"

Request.send (stsMessage)

If Request.Status = 200 Then

GetO365SPO_SAMLAssertionIntegrated = O365SPO_ExtractXmlNode(Request.responseText, "saml:Assertion", False)

End If

End Function

Private Function O365SPO_ExtractXmlNode(xml As String, name As String, valueOnly As Boolean) As String

Dim nodeValue As String

nodeValue = Mid(xml, InStr(xml, "<" & name))

If valueOnly Then

nodeValue = Mid(nodeValue, InStr(nodeValue, ">") + 1)

O365SPO_ExtractXmlNode = Left(nodeValue, InStr(nodeValue, "</" & name) - 1)

Else

O365SPO_ExtractXmlNode = Left(nodeValue, InStr(nodeValue, "</" & name) + Len(name) + 2)

End If

End Function

Private Function O365SPO_CreateGuidString()

Dim guid As GUID_TYPE

Dim strGuid As String

Dim retValue As LongPtr

Const guidLength As Long = 39 'registry GUID format with null terminator {xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx}

retValue = CoCreateGuid(guid)

If retValue = 0 Then

strGuid = String$(guidLength, vbNullChar)

retValue = StringFromGUID2(guid, StrPtr(strGuid), guidLength)

If retValue = guidLength Then

' valid GUID as a string

O365SPO_CreateGuidString = strGuid

End If

End If

End Function
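For comparison, the same WS-Trust request to the AD FS `windowstransport` endpoint can be built from a shell. This is a sketch under several assumptions:

- The message ID is passed in by the caller (e.g. generated with `uuidgen`).
- The STS URL follows the `windowstransport` pattern used in the VBA code above.
- `build_windowstransport_rst` is a hypothetical helper.

The envelope mirrors the one assembled in the VBA function.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the WS-Trust RST envelope for the AD FS
# 'windowstransport' endpoint. Args: STS URL, message id (GUID without braces).
build_windowstransport_rst() {
  local sts_url="$1" message_id="$2"
  cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<s:Envelope xmlns:s="http://www.w3.org/2003/05/soap-envelope" xmlns:a="http://www.w3.org/2005/08/addressing">
<s:Header>
<a:Action s:mustUnderstand="1">http://schemas.xmlsoap.org/ws/2005/02/trust/RST/Issue</a:Action>
<a:MessageID>urn:uuid:${message_id}</a:MessageID>
<a:ReplyTo><a:Address>http://www.w3.org/2005/08/addressing/anonymous</a:Address></a:ReplyTo>
<a:To s:mustUnderstand="1">${sts_url}</a:To>
</s:Header>
<s:Body>
<t:RequestSecurityToken xmlns:t="http://schemas.xmlsoap.org/ws/2005/02/trust">
<wsp:AppliesTo xmlns:wsp="http://schemas.xmlsoap.org/ws/2004/09/policy">
<wsa:EndpointReference xmlns:wsa="http://www.w3.org/2005/08/addressing">
<wsa:Address>urn:federation:MicrosoftOnline</wsa:Address></wsa:EndpointReference>
</wsp:AppliesTo>
<t:KeyType>http://schemas.xmlsoap.org/ws/2005/05/identity/NoProofKey</t:KeyType>
<t:RequestType>http://schemas.xmlsoap.org/ws/2005/02/trust/Issue</t:RequestType>
</t:RequestSecurityToken>
</s:Body>
</s:Envelope>
EOF
}
```

The envelope could then be posted with, e.g., `build_windowstransport_rst "$url" "$(uuidgen)" | curl --negotiate -u : -H "Content-Type: application/soap+xml; charset=utf-8" --data-binary @- "$url"`. This reflects the same point as the VBA comment: the client must actually send the integrated Windows credentials. With curl that is the job of `--negotiate` (Kerberos/SPNEGO) or `--ntlm`, and which of the two AD FS accepts depends on its configuration.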

Sunday, April 15, 2018

Peculiarity with SharePoint Online Active Authentication

- Via OAuth 2.0; this requires registering a SharePoint Add-In as the endpoint (see post Access SharePoint Online using Postman for an outline of this approach)

- Via SAML2.0; against the STS of your tenant
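For the OAuth 2.0 route, a registered Add-In typically obtains an app-only access token with a client-credentials request to Azure ACS. The following is a minimal sketch that only builds the form body. It assumes the Add-In's client id and secret (e.g. from `AppRegNew.aspx`), the tenant's realm id and the tenant host name are known. `urlencode` and `spo_addin_token_body` are hypothetical helpers. `00000003-0000-0ff1-ce00-000000000000` is the well-known principal id of SharePoint Online.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: build the form body of an OAuth 2.0 client-credentials
# request for a SharePoint Add-In (app-only token from Azure ACS).
# Assumption: all inputs are ASCII (client ids, base64 secrets, GUIDs, host names).

# Percent-encode a string for application/x-www-form-urlencoded (ASCII only)
urlencode() {
  local s="$1" out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c="${s:i:1}"
    case "$c" in
      [A-Za-z0-9.~_-]) out+="$c" ;;
      *) out+="$(printf '%%%02X' "'$c")" ;;
    esac
  done
  printf '%s' "$out"
}

# Args: client id, client secret, realm (tenant id), SharePoint host name
spo_addin_token_body() {
  local client_id="$1" client_secret="$2" realm="$3" host="$4"
  # Well-known principal id of SharePoint Online
  local sp_principal="00000003-0000-0ff1-ce00-000000000000"
  printf 'grant_type=client_credentials&client_id=%s&client_secret=%s&resource=%s' \
    "$(urlencode "${client_id}@${realm}")" \
    "$(urlencode "$client_secret")" \
    "$(urlencode "${sp_principal}/${host}@${realm}")"
}
```

To the best of my knowledge, the body is then posted to the ACS token endpoint, e.g. `curl -X POST -H "Content-Type: application/x-www-form-urlencoded" -d "$(spo_addin_token_body ...)" "https://accounts.accesscontrol.windows.net/<realm>/tokens/OAuth/2"`. The returned `access_token` is then sent as `Authorization: Bearer ...` to the SharePoint Online REST API. The secret is encoded explicitly because base64 secrets may contain `+`, `/` and `=`.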

Sunday, March 25, 2018

Optimize for bad-performing navigation in SharePoint Online

- Encapsulate all the code in its own module + namespace, to isolate and separate it from the anonymous global namespace

- Load both the jQuery and knockout.js libraries on the fly, if not yet loaded in the page context

- Make the script generic, so that it can directly be reused on multiple sites without the need for code duplication (spread) and site-specific code changes; this also makes it possible to distribute and load the script code from your own Content Delivery Network (CDN)

- Cache per site and per user, so that the client cache can be used on the same device both for multiple sites and by different logged-on accounts (no need to switch between browsers, e.g. for testing)

- Display the 'selected' state in the navigation, also nested up to the root navigation node

- Display the actual name of the rootweb of the site collection, instead of the phrase 'Root'

- Extend the navigation with navigation nodes that are additional to the site hierarchy, and include them in the navigation cache as well, to avoid retrieving them again from the SharePoint list on each page visit

- Hide from the navigation any navigation nodes that are identified as 'Hidden' (same as possible with the standard structural navigation)

- Execute the asynchronous 'get' retrievals in parallel via 'Promise.all', to shorten the waiting time, and also for cleaner structured code

- Extend with one additional level in the navigation menu (this is accompanied by a required change in the masterpage snippet)

- Include a capability to explicitly refresh the navigation via the querystring and bypass the browser cache; convenient in particular during development + first validation

- Extend with one additional level in the navigation menu (see above, this is accompanied by a required change in the ViewModel code)

- Preserve the standard 'PlaceHolderTopNavBar', as some layout pages (e.g. Site Settings, SharePoint Designer Settings,...) expect it to be present, and throw an exception when it is missing from the masterpage

- Optional: Restore the 'NavigateUp' functionality; via standard control that is still included in the standard 'seattle.master' (a source for this: Restore Navigate Up on SharePoint 2013 [and beyond])

Sunday, March 18, 2018

Beware: set site to readonly impacts the permission set overviews

- Set the lock status of the source site to 'readonly' (Lock or Unlock site collections)

- Enable a redirect from the root URL of the source site to the root URL of the migrated target site (This is a real success and much appreciated by our end users, as it is almost impossible for every user to remember to update their own bookmarks to the sites now migrated. Definitely a best practice I recommend to everyone doing a migration to SharePoint Online!)

- Send out communication to the site owner that his/her site is migrated. Migration issues that were not identified during the User Acceptance Test (UAT) of the migration will be handled as after-care

Monday, March 5, 2018

Users need 'Use Client Integration Features' permission to launch OneDrive Sync from SharePoint Online library

Wednesday, February 21, 2018

Migrated SharePoint 2010 workflow cannot be opened Online in SharePoint Designer 2013

- Disable usage of 'cache' capability in SharePoint Designer 2013: it will then no longer try to load + reuse the cached files that were initially created on your workstation by opening the workflow via SharePoint Designer 2010

- Clean up the local cache to remove the SharePoint 2010 versions of the cached workflow files: delete all cached files from these local locations (Resource: SharePoint Designer cannot display the item (SharePoint 2013))

- C:\Users\<UserName>\AppData\Roaming\Microsoft\SharePoint Designer\ProxyAssemblyCache

- C:\Users\<UserName>\AppData\Roaming\Microsoft\Web Server Extensions\Cache

- C:\Users\<UserName>\AppData\Local\Microsoft\WebsiteCache

- (Get yourself a new / other laptop:) Open the workflow in SharePoint Designer on another workstation, on which the workflow was not managed previously via SharePoint Designer 2010 when still on SharePoint 2010

Monday, February 12, 2018

PowerShell to assess the external access authorization per site

<# .SYNOPSIS Access Review of guest users into the SharePoint tenant #>
#Connection to SharePoint Online
$SPOAdminSiteUrl="https://<tenant>-admin.sharepoint.com/"
try {
    Connect-SPOService -Url $SPOAdminSiteUrl -ErrorAction Stop
} catch {
    exit
}
$externalUsersInfoDictionary= @{}
$externalSharedSites = Get-SPOSite | Where-Object {$_.SharingCapability -eq "ExistingExternalUserSharingOnly"}
foreach ($site in $externalSharedSites) {
    $externalUsersInfoCollection= @()
    $position = 0
    $page = 0
    $pageSize = 50
    while ($position -eq $page * $pageSize) {
        foreach ($externalUser in Get-SPOExternalUser -Position ($page * $pageSize) -PageSize $pageSize -SiteUrl $site.Url | Select DisplayName,Email,WhenCreated) {
            if (!$externalUsersInfoDictionary.ContainsKey($externalUser.Email)) {
                $externalUsersInfoDictionary[$externalUser.Email] = @()
            }
            $externalUsersInfoDictionary[$externalUser.Email]+=$site.Url
            $externalUsersInfo = new-object psobject
            $externalUsersInfo | add-member noteproperty -name "Site Url" -value $site.Url
            $externalUsersInfo | add-member noteproperty -name "Email" -value $externalUser.Email
            $externalUsersInfo | add-member noteproperty -name "DisplayName" -value $externalUser.DisplayName
            $externalUsersInfo | add-member noteproperty -name "WhenCreated" -value $externalUser.WhenCreated
            $externalUsersInfo | add-member noteproperty -name "Preserve Access?" -value "Yes"
            $externalUsersInfoCollection+=$externalUsersInfo
            $position++
        }
        $page++
    }
    if ($externalUsersInfoCollection.Count -ne 0) {
        $exportFile = "External Access Review (" + $site.Url.SubString($site.Url.LastIndexOf("/")+ 1) + ")- " + $(get-date -f yyyy-MM-dd) + ".csv"
        $externalUsersInfoCollection | Export-Csv $exportFile -NoTypeInformation
    }
}
#Export matrix overview: per user, in which of the external sites granted access
$externalUsersInfoCollection= @()
$externalUsersInfoDictionary.Keys | ForEach-Object {
    $externalUsersInfo = new-object psobject
    $externalUsersInfo | add-member noteproperty -name "User Email" -value $_
    foreach ($site in $externalSharedSites) {
        if ($externalUsersInfoDictionary[$_].Contains($site.Url)) {
            $externalUsersInfo | add-member noteproperty -name $site.Url -value "X"
        } else {
            $externalUsersInfo | add-member noteproperty -name $site.Url -value ""
        }
    }
    $externalUsersInfoCollection+=$externalUsersInfo
}
$exportFile = "External Access Review user X site - " + $(get-date -f yyyy-MM-dd) + ".csv"
$externalUsersInfoCollection | Export-Csv $exportFile -NoTypeInformation
Disconnect-SPOService

Friday, February 9, 2018

Azure AD Access Review yet useless for SharePoint External Sharing

- Assess on Azure AD Group Membership

- Assess on access to an Office 365 application

- In the review mode on 'O365 SharePoint Online as application'; I get no results at all.

- In the review mode on 'Group Membership', I selected the dynamic group that includes all guest accounts. With this review mode I do get results to review their access, but the value is limited to insight into the last logon per guest account. As a reviewer you can then decide to Approve or Deny the continued group membership. In reality, however, this review decision cannot be effectuated: the group membership is dynamic, based on a condition, not on explicit addition to the group.

I reported my 'negative' evaluation as feedback to a contact in the Azure AD product group: "I question how it would be applied: removing the 'refused' accounts from the Dynamic Group does not make sense; they should be blocked or removed from Azure AD to block access. Also, as a site owner only wants to take responsibility for access to his/her site, the access decision application should be applied there. Not on tenant level."

His response: "I think you have some interesting use cases. As the product is still in preview, documentation is limited. I will discuss your use cases with my colleagues in Redmond responsible for Access Reviews."

In addition, I also submitted a SharePoint UserVoice idea: Azure AD access review on level of single (shared) site collection